Designer AI – Can Language Models Help Teach Database Design?

Technologies & Methods Used

- LLMs Tested: GPT-3.5 & GPT-4 (OpenAI), alongside other proprietary and open-source models

- Fine-Tuning: GPT-3.5 (custom data)

- Output Format: Designer.io-compatible JSON, produced by a dedicated syntax generator
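The custom data used to fine-tune GPT-3.5 is not reproduced here. The sketch below shows how one training example might look. It assumes OpenAI's chat fine-tuning format, where each line of a JSONL file holds one JSON object with a `messages` list. The system prompt, the helper `to_finetune_record`, and the JSON layout of the target ER model are all hypothetical illustrations, not the project's actual data.

```python
import json

# Hypothetical system prompt; the project's real prompt is not published here.
SYSTEM_PROMPT = (
    "You are a database design assistant. Given a textual description, "
    "list the entities, attributes, and relationships of the ER model."
)


def to_finetune_record(description: str, er_model: dict) -> str:
    """Build one JSONL line in OpenAI's chat fine-tuning format.

    `description` is the natural-language exercise text; `er_model` is the
    reference (human-crafted) logical ER model, serialized as the target reply.
    The dict layout of `er_model` is a hypothetical choice.
    """
    record = {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": description},
            # The assistant turn is the answer the model should learn to give.
            {"role": "assistant", "content": json.dumps(er_model)},
        ]
    }
    return json.dumps(record)


example = to_finetune_record(
    "Each student has a unique ID and a name. Students enroll in courses, "
    "each identified by a code.",
    {
        "entities": [
            {"name": "Student", "attributes": ["id", "name"], "key": ["id"]},
            {"name": "Course", "attributes": ["code"], "key": ["code"]},
        ],
        "relationships": [
            {"name": "EnrollsIn", "between": ["Student", "Course"]},
        ],
    },
)
print(example)
```

Writing one such line per exercise yields a JSONL file. The target is the logical model only, not Designer.io syntax, which matches the two-stage design described in this post.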

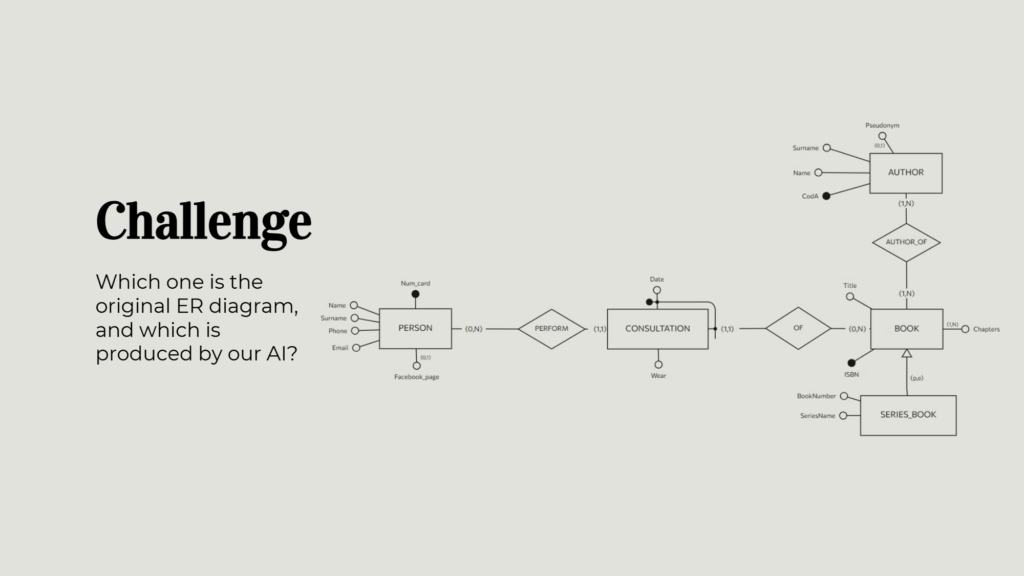

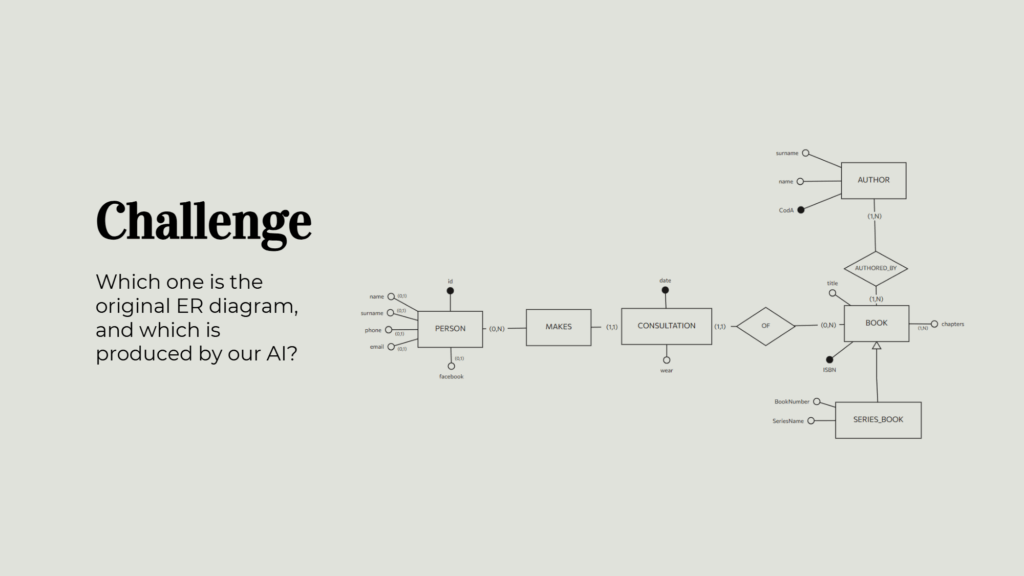

As AI tools become more deeply integrated into education, our Applied Data Science project at Politecnico di Torino aimed to answer a forward-looking question: Can large language models (LLMs) support students in learning database design—specifically, by generating Entity-Relationship (ER) models from natural language instructions?

The goal of our project, “Designer AI,” was to evaluate how reliably LLMs can convert textual descriptions of logical data models into structured ER diagrams—automating a task commonly required in database education.

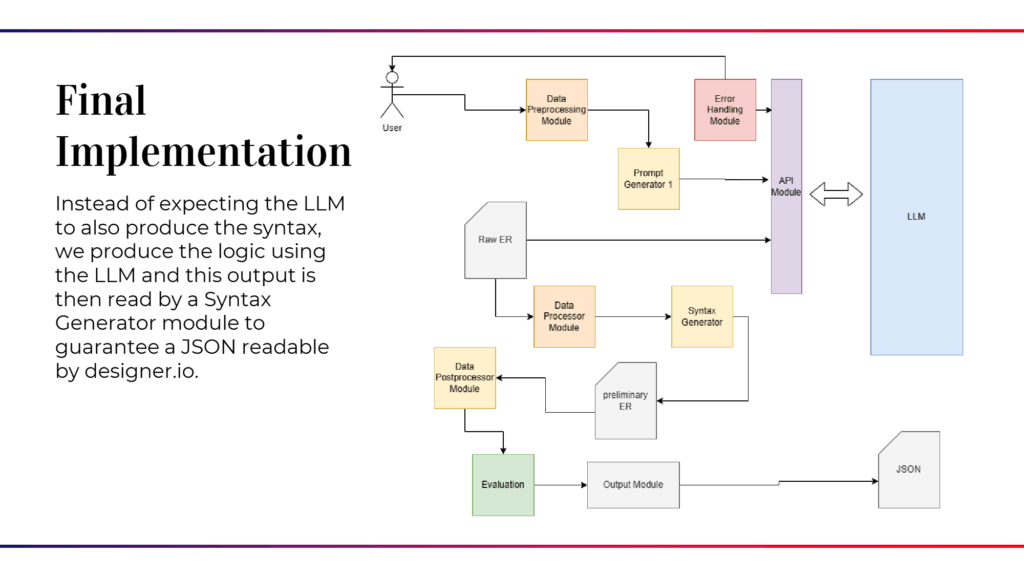

We tested multiple models, including both proprietary (GPT, Claude, Gemini) and open-source (Code Llama, SynthIA, Toppy) LLMs. Our pipeline took a novel two-stage approach: instead of asking the model to generate both the logic and the syntax, we separated the two concerns. The LLM first generates the logical structure of the ER model (entities, attributes, relationships). A separate syntax module then converts that structure into Designer.io-compatible JSON.
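The two-stage split can be sketched as follows. The sketch assumes a simple in-memory representation of the logical model. Stage 1 is stubbed with a fixed result where the real pipeline would call an LLM. Stage 2 is a deterministic converter. The class names, the functions `generate_logical_model` and `to_designer_json`, and the output field names (`nodes`, `links`, `isKey`) are hypothetical. They do not describe Designer.io's actual import schema.

```python
import json
from dataclasses import dataclass, field


@dataclass
class Entity:
    name: str
    attributes: list[str]
    key: list[str] = field(default_factory=list)


@dataclass
class Relationship:
    name: str
    entities: tuple[str, str]
    cardinality: tuple[str, str]  # e.g. ("N", "M") for many-to-many


@dataclass
class ERModel:
    entities: list[Entity]
    relationships: list[Relationship]


def generate_logical_model(description: str) -> ERModel:
    """Stage 1 (stubbed): in the real pipeline an LLM produces this structure."""
    # Hypothetical fixed output standing in for an LLM call on `description`.
    return ERModel(
        entities=[
            Entity("Student", ["id", "name"], key=["id"]),
            Entity("Course", ["code", "title"], key=["code"]),
        ],
        relationships=[Relationship("EnrollsIn", ("Student", "Course"), ("N", "M"))],
    )


def to_designer_json(model: ERModel) -> str:
    """Stage 2: deterministic syntax module (hypothetical output schema)."""
    # Reject relationships that point at entities the model never defined.
    names = {e.name for e in model.entities}
    for r in model.relationships:
        missing = set(r.entities) - names
        if missing:
            raise ValueError(f"{r.name} references unknown entities: {missing}")
    doc = {
        "nodes": [
            {
                "type": "entity",
                "name": e.name,
                "attributes": [{"name": a, "isKey": a in e.key} for a in e.attributes],
            }
            for e in model.entities
        ],
        "links": [
            {
                "type": "relationship",
                "name": r.name,
                "from": r.entities[0],
                "to": r.entities[1],
                "cardinality": list(r.cardinality),
            }
            for r in model.relationships
        ],
    }
    return json.dumps(doc, indent=2)


print(to_designer_json(generate_logical_model("Students enroll in courses.")))
```

With this split, the LLM only has to get the modelling decisions right. Well-formed output syntax is guaranteed by ordinary code, which can also validate the model before emitting it.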

Key challenges we faced included:

- Data imbalance, where certain ER components appeared far more frequently than others.

- Spatial positioning, where early outputs placed diagram elements in unreadable layouts. We resolved this by implementing a 2D coordinate-based positioning algorithm.

- Evaluation metrics, where we needed a principled way to compare generated models with human-crafted ones. We used precision, recall, and F1 scores to gauge their quality.
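The 2D coordinate-based positioning algorithm mentioned above is not described in detail, so the following is only a minimal sketch of one possible approach. It places entities on a roughly square grid. Each relationship goes at the midpoint of the two entities it connects, shifted by half a row whenever that spot is already taken. The function name `grid_layout` and the default `spacing` are hypothetical.

```python
import math


def grid_layout(entities, relationships, spacing=(250, 200)):
    """Assign (x, y) canvas coordinates to ER elements.

    `entities`: list of entity names.
    `relationships`: list of (name, entity_a, entity_b) tuples.
    `spacing`: hypothetical horizontal/vertical gap between grid cells.
    """
    cols = max(1, math.ceil(math.sqrt(len(entities))))  # near-square grid
    dx, dy = spacing
    pos = {}
    for i, name in enumerate(entities):
        row, col = divmod(i, cols)
        pos[name] = (col * dx, row * dy)

    occupied = set(pos.values())
    for name, a, b in relationships:
        (xa, ya), (xb, yb) = pos[a], pos[b]
        x, y = (xa + xb) / 2, (ya + yb) / 2  # midpoint of the two entities
        # Shift by half a row until the spot does not collide with anything.
        while (x, y) in occupied:
            y += dy / 2
        pos[name] = (x, y)
        occupied.add((x, y))
    return pos


layout = grid_layout(
    ["Student", "Course", "Professor"],
    [("EnrollsIn", "Student", "Course"), ("Teaches", "Professor", "Course")],
)
print(layout)
```

This guarantees that no two elements share a position. It does not try to minimize edge crossings or keep lines from passing through boxes, which a production layout would likely need.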
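Precision, recall, and F1 can be computed by treating each ER model as a set of elements. The exact matching rules used in the project are not specified. This sketch assumes that every entity, attribute, and relationship is flattened into a lowercased tuple, and that an element counts as correct only on an exact match. It also scores each element kind separately, one reasonable way (our assumption) to keep frequent kinds from masking errors on rare ones, given the data imbalance noted above. The function names and the example data are hypothetical.

```python
def prf1(predicted: set, reference: set) -> tuple[float, float, float]:
    """Precision, recall, and F1 between two sets of ER elements."""
    tp = len(predicted & reference)  # elements present in both models
    # By convention, a score is 0.0 when its denominator set is empty.
    precision = tp / len(predicted) if predicted else 0.0
    recall = tp / len(reference) if reference else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def per_kind_scores(predicted: set, reference: set) -> dict:
    """Score each element kind (entity, attribute, relationship) on its own."""
    kinds = {e[0] for e in predicted | reference}
    return {
        k: prf1({e for e in predicted if e[0] == k},
                {e for e in reference if e[0] == k})
        for k in sorted(kinds)
    }


# Hypothetical human-crafted reference vs. model output.
reference = {
    ("entity", "student"), ("entity", "course"),
    ("attribute", "student", "id"),
    ("relationship", "enrollsin", "student", "course"),
}
predicted = {
    ("entity", "student"), ("entity", "course"), ("entity", "teacher"),
    ("attribute", "student", "id"), ("attribute", "course", "code"),
}
print(prf1(predicted, reference))
print(per_kind_scores(predicted, reference))
```

Here the prediction finds 3 of the 4 reference elements (recall 0.75) but adds 2 spurious ones (precision 0.6). The per-kind breakdown shows that the missing relationship accounts for a zero relationship score.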

Despite minimal fine-tuning, GPT-3.5 achieved nearly 85% accuracy in generating correct ER models, and models pre-trained on code (like Code Llama) generally performed better. There is still room for improvement, particularly through more careful prompt engineering, deeper fine-tuning, and a larger dataset.

This project demonstrated that LLMs are not just useful assistants—they can become effective teaching tools. From schema creation to automated feedback, AI has real potential to enhance how database design is taught and learned.